Probability Metrics and Determinism

Above we stated that, informally, something is predictable to the degree that it can be known in advance. So there must be a model of that something, and a metric for measuring that degree, i.e., for making predictions about it. We have briefly reviewed the common frequentist theory of probability, and two alternatives (Bayesian, Dempster-Shafer) which are far superior for making predictions about dynamically real-time actions and systems.

Next, Chapter 3 of the book summarizes the issue of predictability metrics.

First, our attention here is on formal predictability, excluding the other contexts in which determinism in particular plays a role, notably philosophy (human free will, etc.).

There is an all-but-universal, serious misunderstanding within the real-time practitioner and research communities that confuses predictability with determinism (and "predictable" with "deterministic"), seemingly without giving the issue any thought, much less having any understanding of probability theories or other formal metrics for predictability.

“I expose these contradictions so that they may excite susceptible minds of my readers to search for truth, and that these contradictions may render their minds more penetrating as the effect of that search.”

— Peter Abelard, Sic et Non

A first principle of predictability is that it is an expanse, i.e., a continuum. One end-point is maximum predictability, i.e., determinism (known with absolute certainty). The other end-point is minimum predictability (which is maximum non-determinism). The rest of the continuum consists of degrees of predictability (equivalently, degrees of non-determinism). A metric for predictability (or non-determinism) depends on the particular predictability model appropriate to its application.

The easiest way to begin thinking about predictability metrics is with a model based on the probability density functions of the frequentist theory of probability. There is a continuum of probability density functions, ranging from the most predictable to the least predictable.

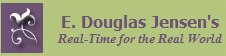

The maximum predictability end-point of that continuum is the constant (degenerate) distribution, in which all of the probability sits on a single value; e.g., the action completion time is deterministic (known absolutely in advance), as aspired to by conventional real-time computing communities:

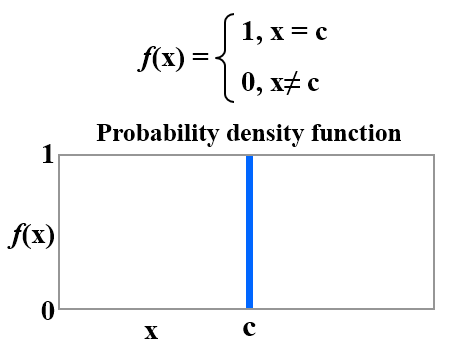

The minimum predictability end-point would seem to be the uniform distribution; e.g., the action is equally probable to complete at any time over the range of the function, and thus is maximally non-deterministic.

In this model (using the frequentist probability theory as the example), the probability distributions lying between the two end-points of the predictability expanse correspond to degrees of non-determinism, e.g., about the action completion time and satisfaction.

That is an example of how the characterization of the minimum predictability (maximally non-deterministic) end-point depends on the probability theory being used. Non-stochastic (e.g., Shannon entropy [ ]) and various other kinds of theories of predictability have their own expanse metrics and end-points [ ].
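To make the two end-points concrete, here is a minimal sketch that scores a discretized completion-time distribution by its Shannon entropy. The choice of entropy as the metric, the normalization to a 0-to-1 score, the function names `shannon_entropy` and `predictability`, and the eight time slots are all assumptions made for illustration. The text above does not prescribe any of them, and other predictability models would use other metrics.

```python
import math


def shannon_entropy(pmf):
    """Shannon entropy, in bits, of a discrete probability mass function."""
    if any(p < 0 for p in pmf) or not math.isclose(sum(pmf), 1.0):
        raise ValueError("pmf must be non-negative and sum to 1")
    # Terms with p == 0 contribute nothing (the limit of p*log(p) is 0).
    return sum(-p * math.log2(p) for p in pmf if p > 0)


def predictability(pmf):
    """Hypothetical normalized score: 1.0 at the deterministic end-point,
    0.0 at the uniform end-point, and in between otherwise."""
    n = len(pmf)
    if n < 2:
        return 1.0  # a single possible outcome is trivially deterministic
    return 1.0 - shannon_entropy(pmf) / math.log2(n)


# Completion time discretized into 8 hypothetical time slots.
deterministic = [0, 0, 0, 1, 0, 0, 0, 0]          # all mass on one slot
peaked = [0.0, 0.05, 0.2, 0.5, 0.2, 0.05, 0.0, 0.0]  # somewhere in between
uniform = [1 / 8] * 8                              # equally probable slots

for name, pmf in [("deterministic", deterministic),
                  ("peaked", peaked),
                  ("uniform", uniform)]:
    print(f"{name:13s} predictability = {predictability(pmf):.3f}")
```

Under these assumptions the deterministic distribution scores 1, the uniform distribution scores 0, and every other distribution over the same slots falls strictly between them, which mirrors the continuum described above.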

This shows that non-determinism is the general case of predictability (the whole continuum of probability distributions except the deterministic one), and determinism is the special-case end-point of predictability (the deterministic distribution in stochastic and some other theories).